In modern warfare, the battlefield has expanded beyond physical borders into the borderless expanse of the digital realm. As terrorist organizations increasingly exploit cyberspace for propaganda, recruitment, and coordination, Pakistan’s security and intelligence agencies are responding with a sophisticated arsenal of their own: Open-Source Intelligence (OSINT) and AI-based surveillance.

This pivot to the digital battlefield is a critical evolution in the nation’s counter-terrorism efforts, aimed at neutralizing threats before they materialize. The government’s heightened focus on digital security, underscored by recent amendments to the Prevention of Electronic Crimes Act (PECA), makes the deployment of these technologies a timely subject for critical analysis, one that must weigh operational effectiveness against complex ethical considerations.

Deploying OSINT and AI in Surveillance

The modern terrorist threat is no longer confined to traditional networks; it thrives on social media platforms, encrypted messaging services, and the dark web. In this environment, OSINT has become an indispensable tool. Pakistan’s intelligence apparatus is actively monitoring publicly available information from social media platforms like Facebook, X (formerly Twitter), and Telegram.

Analysts meticulously sift through vast amounts of data, including posts, videos, and images, to identify patterns of radicalization, detect coded messages, and map out the digital footprint of extremist groups. This process allows security agencies to piece together a comprehensive picture of a threat landscape that was once hidden from view.

Complementing OSINT are advanced AI-based surveillance systems. These technologies, including facial recognition and predictive analytics, are being integrated into the security infrastructure. AI-powered facial recognition systems, deployed in public spaces and at checkpoints, can cross-reference live feeds with databases of known suspects, providing real-time alerts.

Simultaneously, predictive analytics tools analyze behavioral data, communication patterns, and geographical movements to forecast potential terrorist hotspots or identify individuals at risk of radicalization. This data-driven approach is a significant departure from traditional reactive measures, holding out the promise of averting attacks before they happen. The government’s push for digital security and the legal framework provided by PECA have enabled a more aggressive and centralized approach to deploying these technologies.

Analyzing Effectiveness and Ethical Implications

The effectiveness of these technologies in combating terrorism is a subject of intense debate. Proponents argue that OSINT and AI offer unprecedented speed and scale in identifying threats. By leveraging big data, security agencies can uncover connections and plots that would be impossible for human analysts to detect alone.

The ability to monitor online chatter and use predictive models can lead to the successful disruption of terrorist cells and the prevention of attacks, thereby saving lives. For a nation that has borne the brunt of terrorism for decades, these tools represent a strategic advantage that could turn the tide.

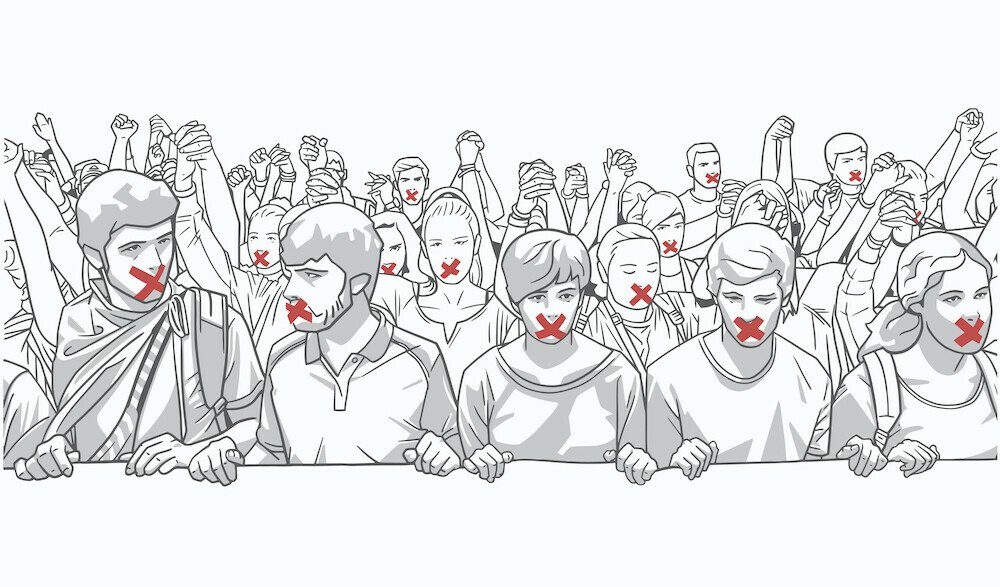

However, the deployment of such powerful surveillance tools comes with a host of profound ethical implications. The use of OSINT and AI-based surveillance, particularly without robust oversight, can lead to serious violations of privacy and civil liberties. The collection of vast amounts of personal data from social media and public spaces raises concerns about mass surveillance and the potential for abuse.

Critics argue that the line between monitoring genuine threats and infringing on the rights of ordinary citizens is dangerously thin. Facial recognition systems, for instance, have been shown in independent evaluations to be less accurate for some racial and gender groups than for others, raising the risk of misidentification and the disproportionate targeting of certain communities.

Similarly, predictive analytics can reinforce existing biases, leading to the wrongful profiling of individuals based on their digital behavior rather than concrete evidence. The recent amendments to PECA, which have been criticized for expanding the government’s power to monitor online content and prosecute individuals for digital speech, have only amplified these concerns. There is a fear that these tools, intended for counter-terrorism, could be weaponized to suppress dissent and political opposition, transforming a security measure into a tool for social control.

PECA and Public Trust

The legal framework governing this digital battlefield is a crucial aspect of the debate. The amendments to PECA are a key development, reflecting the government’s recognition of the need for legal authority to operate in the digital space. However, they have been met with significant criticism from human rights activists and legal experts.

The broad and vaguely worded provisions in the law are seen as a potential threat to freedom of expression and the right to information. While the government maintains that these measures are essential to combat terrorism and online misinformation, the lack of transparency and judicial oversight in their implementation raises serious questions about their fairness and legality.

The success of a digital counter-terrorism strategy ultimately hinges on public trust. If the populace views these technologies not as tools for their protection but as instruments of state control, the entire endeavor could be undermined. A breakdown of trust could lead to public resistance, hindering intelligence gathering and creating a climate of fear.

The government, therefore, faces a delicate balancing act. It must demonstrate that the use of OSINT and AI is targeted, proportionate, and governed by a strong, independent oversight mechanism. Without a clear and transparent legal and ethical framework, the digital battlefield, while effective in some respects, risks eroding the very democratic values it is meant to protect. In the end, the true measure of success will be not just the number of terrorist plots thwarted but the ability to thwart them while safeguarding the fundamental rights and freedoms of a digitally connected society.